Phase Transitions

GPT-3 shipped two years ago. Its capabilities, and those of descendant language models like OpenAI Codex, astonished me on day one; two years later, I’m still just as astonished.

Earlier this week OpenAI announced ChatGPT, a new variant intended for interactive dialogue. With ChatGPT I find myself astonished all over again.

One delightful discovery is that back-and-forth conversation is a good way to build code. It’s a form of literate programming that Knuth probably never imagined.

I paid for GitHub Copilot the moment I could. Copilot, which builds on top of Codex, is more than just astonishing: it’s useful. Yet, as impressive as Copilot is, playing with ChatGPT makes it clear that Copilot has the potential to do vastly more even today.

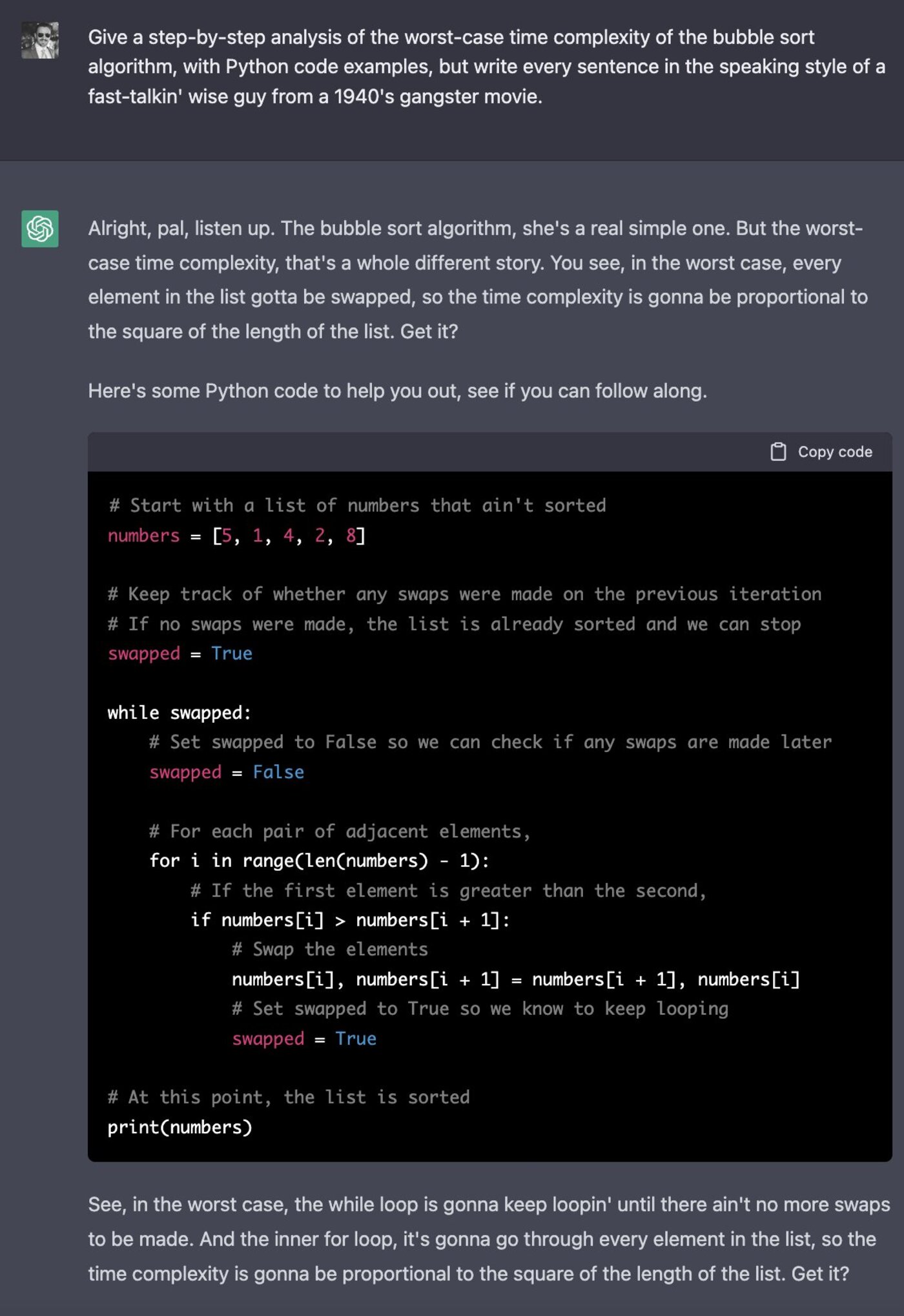

The Internet has done its work and there are too many fun ChatGPT code samples to choose from. The most compelling examples refine code just by talking it over. There are also countless short examples that might have been accomplished with the previous generation of Codex or GPT-3 but that seem to have renewed potency today, like explaining a bug or exploiting a buffer overflow. But if I were to highlight just one example that seems to capture the moment, it might be this complete absurdity from Riley Goodside:

Computers pretending to be gangsters with a knack for complexity theory. That is where we’re at today.

We’re at one of the handful of moments when our industry undergoes a deep and lasting phase transition. It’s easy to draw parallels with previous transitions like the advent of the Web in 1991. Then, as now, a new technology was introduced that collected recent advances into a package that felt wholly new. Even in its first version, that new technology was instantly useful in spite of its obvious flaws. It was easy to imagine a long road of improvements ahead. Most of all: people couldn’t look away. The Web struck like lightning. Large language models? Much the same.

Having said this, there’s also something that feels entirely different to me about this moment. We’re playing with language. Language is primal. It is quintessentially human. It is fire. It’s no accident that GPT-3 was built by an organization whose stated ambition is to develop the world’s first artificial general intelligence.

What lies on the other side of this transition? I don’t know; until we get there, I’ll have to content myself with those fast-talkin’ wise guys. Hopefully they can teach me another thing or two about computer programming along the way.